Iren Earnings: What Happened and Why It Matters

Can AI Really Read Your Mind? The Numbers Say "Not Yet"

The promise of technology reading our minds has always been a tantalizing, if slightly unsettling, prospect. We're bombarded with headlines about AI breakthroughs, neural interfaces, and algorithms that can predict our behavior. But how much of this is genuine progress, and how much is just cleverly packaged hype? As someone who spent years sifting through financial data, I've learned to be skeptical of anything that sounds too good to be true.

The Signal-to-Noise Problem

The core issue with "mind-reading" AI isn't a lack of data; it's the overwhelming amount of noise. Our brains are incredibly complex, constantly firing off signals related to everything from our immediate surroundings to our deepest memories. Extracting a specific thought or intention from this cacophony is like trying to find a single drop of water in the ocean.

Most of the reported breakthroughs rely on correlating brain activity (measured through fMRI, EEG, or other methods) with specific stimuli or tasks. For instance, an AI might be trained to identify patterns in brain scans when a person looks at a picture of a cat versus a dog. But that's a far cry from reading spontaneous, unstructured thoughts. The signal-to-noise ratio is simply too low for reliable, real-world applications. This is the part I find genuinely puzzling: the leap from controlled experiments to generalized mind-reading seems optimistic at best.
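To make the signal-to-noise point concrete, here is a minimal sketch under deliberately generous assumptions that real brain recordings do not meet: a single measurement, two equally likely stimuli that shift it by +a or -a, and Gaussian noise with standard deviation sigma. The function name `decoding_accuracy` is hypothetical. Under those assumptions, the best possible single-trial accuracy is Phi(a / sigma), where Phi is the standard normal CDF, so it can be computed directly from the SNR in decibels.

```python
import math


def decoding_accuracy(snr_db: float) -> float:
    """Best-case single-trial accuracy for telling +a from -a in Gaussian noise.

    Toy assumption: snr_db is the power ratio a**2 / sigma**2 in decibels.
    A threshold at zero is optimal here, giving accuracy = Phi(a / sigma),
    where Phi is the standard normal CDF (written via math.erf).
    """
    amplitude_over_sigma = math.sqrt(10 ** (snr_db / 10))
    return 0.5 * (1 + math.erf(amplitude_over_sigma / math.sqrt(2)))


# Compare a clean signal with progressively noisier ones.
for snr_db in (10, 0, -10, -20):
    print(f"SNR {snr_db:>4} dB -> accuracy {decoding_accuracy(snr_db):.3f}")
```

Under these toy assumptions, accuracy is about 84% at 0 dB and falls to roughly 54%, barely better than a coin flip, at -20 dB. Real recordings add many more noise sources, so this is an upper bound on what the simple model allows, not an estimate for any actual system.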

Furthermore, even in controlled experiments, the accuracy rates are often misleading. A system might claim 90% accuracy in identifying a cat versus a dog, but what does that really mean? If the AI is simply picking up on basic visual processing differences, it's not actually "reading" the subject's mind; it's just detecting the presence of certain visual features. This is a crucial distinction that often gets lost in the media frenzy.
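The distinction can be illustrated with a small simulation. This is a hypothetical sketch, not a model of any real study: it assumes a made-up stimulus set in which the cat photos happen to have higher contrast than the dog photos, and "recordings" whose first channel simply tracks that contrast while the other channels are pure noise. A basic nearest-centroid decoder (the helper names `simulate_trial`, `fit_centroids`, and `predict` are illustrative) still scores highly, even though nothing in the data reflects what the subject is thinking.

```python
import random
import statistics

random.seed(0)  # fixed seed so the simulation is repeatable


def simulate_trial(label: str) -> list[float]:
    # Hypothetical assumption: channel 0 responds only to image contrast,
    # which is higher for cat photos in this made-up stimulus set.
    # Channels 1 and 2 carry nothing but noise.
    contrast = 1.0 if label == "cat" else -1.0
    return [contrast + random.gauss(0, 0.5), random.gauss(0, 1), random.gauss(0, 1)]


def make_dataset(n: int) -> list[tuple[list[float], str]]:
    labels = ["cat", "dog"] * (n // 2)
    return [(simulate_trial(lbl), lbl) for lbl in labels]


def fit_centroids(data: list[tuple[list[float], str]]) -> dict[str, list[float]]:
    # Average the training trials of each class, channel by channel.
    centroids = {}
    for lbl in ("cat", "dog"):
        rows = [x for x, y in data if y == lbl]
        centroids[lbl] = [statistics.fmean(col) for col in zip(*rows)]
    return centroids


def predict(centroids: dict[str, list[float]], x: list[float]) -> str:
    # Assign the label whose centroid is closest in squared Euclidean distance.
    def sq_dist(c: list[float]) -> float:
        return sum((a - b) ** 2 for a, b in zip(x, c))

    return min(centroids, key=lambda lbl: sq_dist(centroids[lbl]))


train, test = make_dataset(200), make_dataset(200)
centroids = fit_centroids(train)
accuracy = sum(predict(centroids, x) == y for x, y in test) / len(test)
print(f"test accuracy: {accuracy:.0%}")
```

The decoder's high score comes entirely from a low-level property of the images, which is exactly why a headline accuracy figure says little on its own about whether anything resembling a thought was decoded.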

The Ethics of Interpretation

Beyond the technical limitations, there's a significant ethical dimension to consider: the interpretation of brain data. Even if we could accurately decode brain activity, what would we do with that information? Who would have access to it, and how would it be used? The potential for misuse is staggering, from targeted advertising to political manipulation to outright coercion. And let's be honest, the tech industry's track record on data privacy isn't exactly reassuring (the Cambridge Analytica scandal still stings).

Details on how these systems would secure brain data remain scarce, but the impact of a potential breach is clear. Imagine a world where your innermost thoughts are vulnerable to hacking or surveillance. It's a dystopian nightmare scenario that we need to take seriously.

The human brain is not a simple binary switch that can be easily read; it is a complex, constantly changing organ. Today's "mind-reading" AI is closer to reading tea leaves: there may be some truth in the output, but a great deal of guesswork and imagination goes into it.

So, What's the Real Story?

While the idea of AI reading our minds is captivating, the reality is far more complex and less advanced than the headlines suggest. The technical hurdles are significant, and the ethical implications are profound. We need to approach this technology with a healthy dose of skepticism and a clear understanding of its limitations. Only then can we have a rational conversation about its potential benefits and risks.

Related Articles

GALA: Tracee Ellis Ross and the "Push Humanity Forward" Nonsense

Another Pat on the Back for... Doing Their Job? So, the Ebony Power 100 Gala happened. Big deal. Ano...

Anthropic AI: What It Is and Why You Should Probably Be Skeptical

Alright, let's get this straight. Another day, another "revolutionary" AI breakthrough promising to...

Palantir Stock (PLTR): The Hype, The Price, and Why It's Not Nvidia

So, let me get this straight. The U.S. Army hands a nine-figure contract to the tech-bro darlings of...

Brooke Rollins: Who She Is and What She's Really After

So, the U.S. Secretary of Agriculture just announced a "top national security priorotiey" on X, the...

11.11: The Moment That Reimagines Our Tomorrow

The Dawn of a New Human Story: Why Our Future Just Got Personal Sometimes, you stumble upon an idea,...

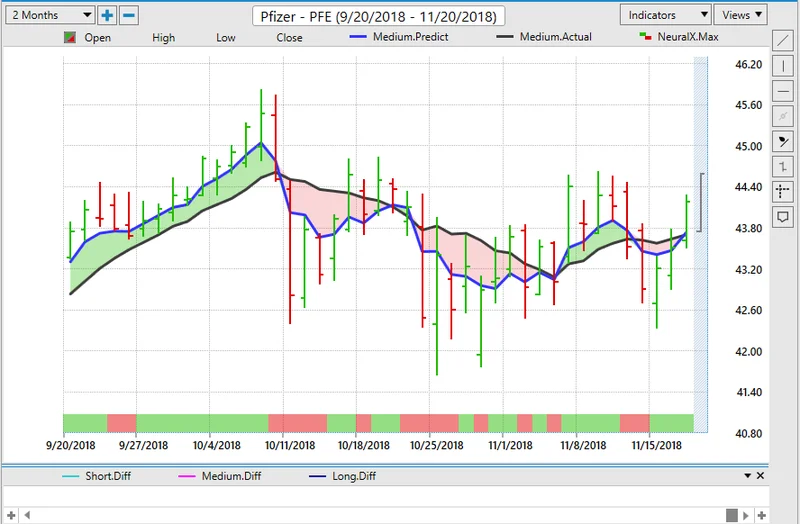

Pfizer's Next Chapter: The Biotech Revolution We've Been Waiting For

You’ve seen it. I’ve seen it. We’ve all been there. You’re deep in a flow state, chasing a thread of...